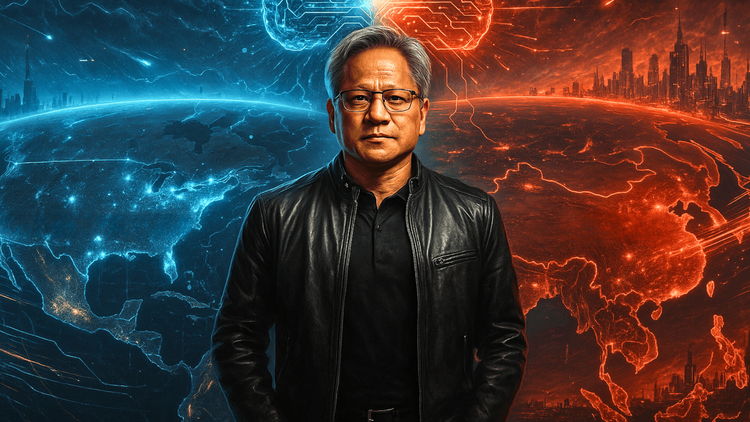

“We’re Not Far Ahead of China Overall”: Jensen Huang Sounds the Alarm on the Global AI Race

Inside the CNBC interview that redefined the AI conversation — Jensen Huang explains NVIDIA’s strategy, the race with China, and why AI’s next industrial revolution has only just begun.

NVIDIA’s Jensen Huang sat down with CNBC’s Squawk Box on October 8, 2025, and offered a rare, unfiltered look at what is powering the world’s most valuable company. The conversation ranged from OpenAI and xAI to energy strategy, China, immigration, and the economics of tokens. Every reference and quotation here is sourced to CNBC’s interview and companion clips on CNBC.com.

Huang confirmed that NVIDIA’s relationship with OpenAI has shifted from primarily cloud-channel sales to direct supply. He framed the move as a natural evolution of a partnership that began when NVIDIA delivered the first DGX system to OpenAI in 2016, as he told CNBC. What makes it consequential is the scope: he described NVIDIA’s offering as full AI infrastructure, meaning not only GPUs but also CPUs, networking, switches, and software. That matters because the company is optimizing the entire stack to push down the cost of token generation. The tone was confident and specific, and the message to investors was straightforward: NVIDIA wants to be the single vendor that can ship a turnkey AI factory.

“I’m surprised they would give away 10%” on AMD’s OpenAI deal

Asked about AMD’s equity-sweetened arrangement with OpenAI, Huang called it imaginative and surprising, adding that he would not have expected a sizable stake to be granted before the next-generation product ships. The contrast with NVIDIA’s approach is telling. NVIDIA has the option to invest alongside others in model builders but does not hinge supply on exclusivity, according to Huang’s remarks to CNBC. The strategy is to keep running faster across the whole system, not to win on one chip alone.

Huang’s most investor-sensitive line was about unit economics. He told CNBC that NVIDIA’s roadmap aims to slash token generation costs by an order of magnitude or more versus the prior generation. He argued that Moore’s law alone would imply incremental gains, while system-level optimization can deliver step changes. If that trajectory holds, it supports the thesis that AI demand expands as capability rises and costs fall, which in turn supports data-center capex and sustained platform share.
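To make the gap between incremental gains and step changes concrete, here is a minimal Python sketch of how cost per token compounds across hardware generations. The improvement factors (2x and 10x per generation) and the function name `relative_cost` are illustrative assumptions, not figures or terms from the interview. The 10x value simply stands in for "an order of magnitude."

```python
def relative_cost(improvement_per_generation: float, generations: int) -> float:
    """Return cost per token relative to today's cost (1.0) after
    `generations` product generations, assuming the same improvement
    factor applies in every generation."""
    return 1.0 / (improvement_per_generation ** generations)


# Hypothetical factors, chosen only for illustration (not from the interview).
INCREMENTAL = 2.0   # assumed stand-in for a transistor-scaling-style gain
STEP_CHANGE = 10.0  # assumed stand-in for "an order of magnitude"

if __name__ == "__main__":
    print("generation  incremental  step-change")
    for gen in range(1, 4):
        inc = relative_cost(INCREMENTAL, gen)
        step = relative_cost(STEP_CHANGE, gen)
        print(f"{gen:>10}  {inc:>11.4f}  {step:>11.4f}")
```

Under these assumed factors the two paths diverge quickly because the gains compound: each generation multiplies the previous generation's cost reduction rather than adding to it. The sketch says nothing about demand, which is the second half of the thesis above.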